Continuing the data warehouse article series, the next article, Data Warehousing Best Practices for SQL Server, is now available at www.mssqltips.com. I have also updated the list of articles at Data is everywhere, but?: All Data Warehouse Related Articles.

Data is everywhere, but?

Saturday, January 6, 2024

Saturday, December 9, 2023

All Data Warehouse Related Articles

Writing is my passion, and it has opened many avenues for me over the years. I thought of combining all my data warehouse related articles into one post, grouped by area of data warehousing.

DESIGN

• What is a Data Warehouse? (mssqltips.com)
• Things you should avoid when designing a Data Warehouse (sqlshack.com)
• Infrastructure Planning for a SQL Server Data Warehouse (mssqltips.com)
• Why Surrogate Keys are Needed for a SQL Server Data Warehouse (mssqltips.com)
• Create an Extended Date Dimension for a SQL Server Data Warehouse (mssqltips.com)
• SQL Server Temporal Tables Overview (mssqltips.com)
• Data Warehousing Best Practices for SQL Server (mssqltips.com)

• Testing Type 2 Slowly Changing Dimensions in a Data Warehouse (sqlshack.com)
• Implementing Slowly Changing Dimensions (SCDs) in Data Warehouses (sqlshack.com)
• Incremental Data Extraction for ETL using Database Snapshots (sqlshack.com)
• Use Replication to improve the ETL process in SQL Server (sqlshack.com)
• Using the SSIS Script Component as a Data Source (sqlshack.com)
• Fuzzy Lookup Transformations in SSIS (sqlshack.com)
• SSIS Conditional Split overview (sqlshack.com)
• Loading Historical Data into a SQL Server Data Warehouse (mssqltips.com)
• Retry SSIS Control Flow Tasks (mssqltips.com)
• SSIS CDC Tasks for Incremental Data Loading (mssqltips.com)

• Multi-language support for SSAS (sqlshack.com)
• Enhancing Data Analytics with SSAS Dimension Hierarchies (sqlshack.com)
• Improve readability with SSAS Perspectives (sqlshack.com)
• SSAS Database Management (sqlshack.com)
• OLAP Cubes in SQL Server (sqlshack.com)
• SSAS Hardware Configuration Recommendations (mssqltips.com)
• Create KPI in a SSAS Cube (mssqltips.com)
• Monitoring SSAS with Extended Events (mssqltips.com)

SSRS

• Exporting SSRS reports to multiple worksheets in Excel (sqlshack.com)
• Enhancing Customer Experiences with Subscriptions in SSRS (sqlshack.com)
• Alternate Row Colors in SSRS (sqlshack.com)
• Migrate On-Premises SQL Server Business Intelligence Solution to Azure (mssqltips.com)

Other

• Dynamic Data Masking in SQL Server (sqlshack.com)
• Data Disaster Recovery with Log Shipping (sqlshack.com)
• Using the SQL Server Service Broker for Asynchronous Processing (sqlshack.com)
• SQL Server auditing with Server and Database audit specifications (sqlshack.com)
• Archiving SQL Server data using Partitions (sqlshack.com)
• Script to Create and Update Missing SQL Server Columnstore Indexes (mssqltips.com)
• SQL Server Clustered Index Behavior Explained via Execution Plans (mssqltips.com)
• SQL Server Maintenance Plan Index Rebuild and Reorganize Tasks (mssqltips.com)
• SQL Server Resource Governor Configuration with T-SQL and SSMS (mssqltips.com)

Friday, September 1, 2023

Dataset : T20 Outcome of Extra Delivery & Free Hit

Wednesday, August 23, 2023

Violin Plot - Compare Data

Comparing and contrasting are parts of data analysis that support vital decisions. A violin chart integrated with a box plot is one of the charts that can be used to compare data. Let us see how we can use the Orange data mining tool to compare data using the violin chart.
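For readers who prefer code to a GUI tool, the box plot drawn inside a violin chart boils down to a five-number summary per group. The snippet below is only a rough sketch of that idea in plain Python. The group names and values are made-up placeholders, not the dataset used in this post, and `five_number_summary` is a hypothetical helper name.

```python
from statistics import quantiles


def five_number_summary(values):
    """Return (min, Q1, median, Q3, max), the figures a box plot displays."""
    q1, median, q3 = quantiles(values, n=4, method="inclusive")
    return min(values), q1, median, q3, max(values)


# Hypothetical values for two groups; NOT the dataset from this post.
groups = {
    "Group A": [1, 2, 3, 4, 5],
    "Group B": [2, 4, 6, 8, 10],
}

summaries = {name: five_number_summary(vals) for name, vals in groups.items()}
for name, summary in summaries.items():
    print(name, summary)
```

Placing the summaries side by side gives a numeric version of the comparison that the chart makes visually. The violin shape itself adds the density of the data, which this sketch does not compute.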

Let us assume that the following is the dataset that we need to compare.

Monday, August 21, 2023

How Data Science Project Works - From the Koobiyo Teledrama

About five years ago, the Koobiyo teledrama was very popular because of its unconventional nature. It was a political teledrama, which is one reason it became popular. However, this post discusses not the political side of the teledrama but its data science side.

Tuesday, August 15, 2023

Article : Learn about Data Warehousing for Analytical Needs

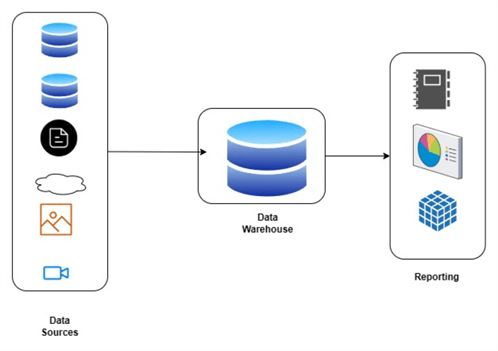

I started another article series at www.mssqltips.com on data warehousing. Even though there are many tools to build data warehouses, I still find there is a lack of conceptual knowledge in the community, so I am trying to cover that gap. Read the What is a Data Warehouse? article and send your comments.

Sunday, July 30, 2023

Who is a Data Scientist? A Dragon a Pegasus or a Unicorn?

As per the song, the dragon is a combination of

• trunk of an elephant
• legs of a lion
• ears of a pig
• teeth of a crocodile
• eyes of a monkey
• body of a fish
• wings of a bird

Combining the strongest part of each animal makes the dragon strong enough to achieve its required tasks.

Saturday, July 29, 2023

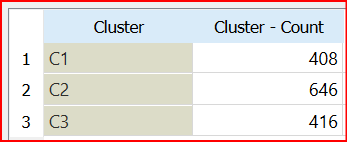

Clustering as Pre-Processing Technique for Classification

Clustering is often used to identify natural groups in a dataset. Since clustering does not depend on any target (dependent) variable, it is said to be unsupervised learning. Classification is supervised, as it models data for a target or dependent variable. This post describes clustering as a pre-processing task for classification, using the Orange Data Mining tool to demonstrate the scenario.
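Outside Orange, the same idea can be sketched in a few lines of plain Python. This is only an illustration of one common variant (cluster first, then let the classifier use the cluster as its input feature), not a reproduction of the Orange workflow. The data, labels, and function names (`kmeans_1d`, `nearest`, `predict`) are all hypothetical.

```python
from collections import Counter


def nearest(value, centroids):
    """Index of the centroid closest to value."""
    return min(range(len(centroids)), key=lambda i: abs(value - centroids[i]))


def kmeans_1d(values, centroids, iterations=10):
    """Tiny 1-D k-means; returns the final centroids."""
    centroids = list(centroids)
    for _ in range(iterations):
        buckets = [[] for _ in centroids]
        for v in values:
            buckets[nearest(v, centroids)].append(v)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [sum(b) / len(b) if b else c
                     for b, c in zip(buckets, centroids)]
    return centroids


# Hypothetical training data: one numeric feature and a class label.
values = [1.0, 1.5, 2.0, 8.0, 8.5, 9.0]
labels = ["low", "low", "low", "high", "high", "high"]

# Step 1 (unsupervised): cluster the feature without looking at the labels.
centroids = kmeans_1d(values, centroids=[min(values), max(values)])

# Step 2 (pre-processing): attach the cluster id to every row.
cluster_ids = [nearest(v, centroids) for v in values]

# Step 3 (supervised): a very simple classifier that predicts the
# majority label seen in each cluster.
votes = {}
for cid, label in zip(cluster_ids, labels):
    votes.setdefault(cid, Counter())[label] += 1
majority = {cid: counts.most_common(1)[0][0] for cid, counts in votes.items()}


def predict(value):
    """Classify a new value through its cluster."""
    return majority[nearest(value, centroids)]


print(centroids, predict(7.0), predict(2.5))
```

In a real project the cluster id would usually be added alongside the original features and passed to a proper classifier. The majority vote here just keeps the sketch self-contained.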

The following is the complete Orange Data Mining workflow, which is also available on GitHub.

Wednesday, April 5, 2023

Is GPT-4 a Job Destroyer?

With the rise of GPT-4, many jobs will be in the firing line. For example, with the expectation that 10% of letter writing will be done using GPT-4, the jobs of secretaries are in danger. What about software developers? You can upload an image and get the source code from GPT-4!

Isn't that the same fear we had when computers were introduced? Having been in the software field in Sri Lanka in the early 1990s, I still remember how much pressure we faced from many employees when we tried to install new software applications. During most product demonstrations, we used to conclude our sessions by addressing that fear. We said that the new software system would not destroy any jobs but would instead create new ones. Isn't that true? If I look back at those industries, yes, some jobs have gone for good, but there are new jobs.

Let us look at cricket. During the 1980s, there were only a couple of cameras for TV coverage. With the improvement in technology, there are now many cameras, including spider, stump, and drone cameras. With these technologies, viewership has increased and more revenue avenues are being created. Further, cricket has become more glamorous and has entered a new world. With that, new jobs have been created, and a good example is the third umpire.

The same will hold for GPT. Let us wait and see in time to come.

Monday, December 12, 2022

2nd Evaluation of Oxford Mathematical Model during the Knockouts

Let us analyze the stage-wise accuracy rates.

The problem with this accuracy calculation is that errors propagate. Oxford predicted that Belgium would feature in the final, but Belgium was out of the competition after the first round, so that miss is reflected at every stage. Would you accept this model with just over 50% accuracy? That is barely better than guessing at random. As we have been insisting, predicting the outcome of a sporting event is not yet successful, at least for the moment.
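To see how one wrong pick propagates, here is a small sketch of a stage-wise accuracy calculation. It assumes accuracy at a stage means the fraction of teams predicted for that stage that actually reached it. The teams A to E are placeholders, not the Oxford predictions or the real results, and `stage_accuracy` is a hypothetical helper.

```python
def stage_accuracy(predicted, actual):
    """Fraction of predicted teams that actually reached each stage."""
    return {
        stage: len(set(predicted[stage]) & set(actual[stage])) / len(predicted[stage])
        for stage in predicted
    }


# Hypothetical bracket: the model expects team A to go all the way.
predicted = {
    "semi-final": ["A", "B", "C", "D"],
    "final": ["A", "B"],
    "winner": ["A"],
}

# Hypothetical outcome: team A was eliminated early and never appears.
actual = {
    "semi-final": ["B", "C", "D", "E"],
    "final": ["B", "C"],
    "winner": ["B"],
}

accuracy = stage_accuracy(predicted, actual)
for stage, value in accuracy.items():
    print(f"{stage}: {value:.0%}")
```

The single early miss on team A costs a point at every later stage, which is the propagation effect described above.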